Day 18

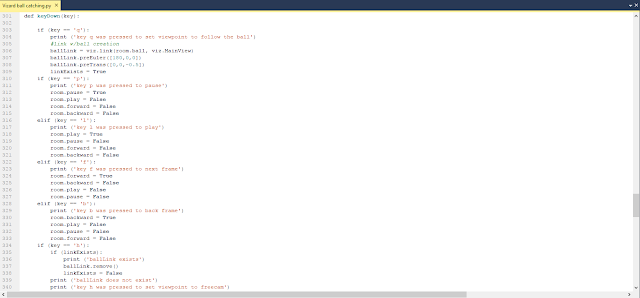

Today I hastily finished a portion of my structure draft in time to hand it in, but it is far from complete. Most of the day went to more paper summaries, but later I finally switched things up by running an experiment on Emily, in which we at last collected the data we need. Unfortunately, the data we reviewed was biased, so it will be of little to no use in any final analysis. For the rest of the day I sat down with Kamran and began to decipher OpenAI code and work out how we can use it in our 3D simulation.